The greatest challenge of autonomous driving will be the replacement of the human power of deduction with artificial intelligence. At the moment, a machine cannot be taught to make purely abstract decisions; instead, all the reaction models are the consequence of pre-programmed solution models.

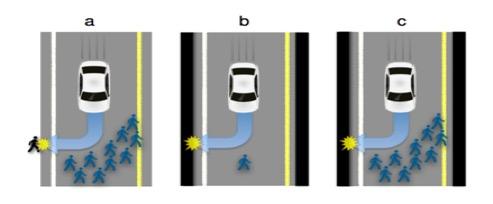

In problematic situations, the machine is programmed to select the option that causes the least damage, in other words to avoid collisions and bodily injuries. This is known as a win-lose situation. The challenge lies in situations where none of the available options leads to a positive outcome, so-called lose-lose situations. How should a machine be programmed to solve an ethical problem, and who decides how it is solved?

Let’s imagine a scenario where a child suddenly jumps in front of the car, and the only way to avoid hitting the child is to drive the car off the road, which, in turn, has a high chance of causing the death of the driver and other passengers. How should the car be programmed to react in such a situation? Should it spare the passengers by killing the child, or kill the passengers and thus save the child? The machine may also face situations where the decision turns on other criteria: old or young, one person or several?

Figure: Ethical cars

Source: MIT Technology Review.

Current legislation is not very helpful in terms of ethical choices. The Constitution of Finland stipulates that each person has the right to life and integrity (section 7) and that no one shall be treated differently from other persons on discriminatory grounds (section 6). In light of this, it is impossible to decide who is hit or how the person to be hit is selected.

In many discussions, the most ethical solution is considered to be a model in which the vehicle continues in its lane, in other words hits whoever is directly in front of it. This model would not consider the personal characteristics or the number of people involved. The ethical basis is the view that letting a death happen is the better, or at least the less bad, option compared with actively selecting the person who will die.
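The two rules discussed so far can be put side by side in a short sketch. This is only an illustration of the reasoning, not a description of how any manufacturer's software works. The `Maneuver` type, the `expected_harm` score and the `choose_maneuver` function are hypothetical names introduced here, and the sketch assumes that the harm of each option could somehow be estimated in advance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    name: str
    stays_in_lane: bool
    expected_harm: float  # hypothetical score; 0.0 means nobody is hurt


def choose_maneuver(options):
    """Illustrative rule: avoid harm if possible, otherwise stay in lane."""
    # Win-lose situation: at least one option hurts nobody, so take it.
    harmless = [m for m in options if m.expected_harm == 0.0]
    if harmless:
        return harmless[0]
    # Lose-lose situation: continue in the current lane and deliberately
    # ignore who, or how many, would be affected by each option.
    for m in options:
        if m.stays_in_lane:
            return m
    raise ValueError("no in-lane option available")


win_lose = [
    Maneuver("continue in lane", True, 1.0),
    Maneuver("brake and swerve", False, 0.0),
]
lose_lose = [
    Maneuver("swerve off road", False, 0.7),
    Maneuver("continue in lane", True, 0.9),
]

print(choose_maneuver(win_lose).name)   # brake and swerve
print(choose_maneuver(lose_lose).name)  # continue in lane
```

In the second case the rule keeps the vehicle in its lane even though the other option has a lower harm score. That is precisely the point of the model: it declines to rank the people involved.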

Whichever solutions are decided upon, they must be predictable and capable of being applied consistently. Such decisions cannot be made by individual businesses or programmers; ethical logic must be based on governmental decision-making and legislation. A separate challenge is posed by situations where different countries apply different ethical perspectives to the same problem. Should the features of the car change according to the country in which it is being used at any given time?

In collisions, the most likely option is a solution model where the passengers in the vehicle are protected at the expense of others. Self-driving cars would probably not become a huge commercial success if they were programmed to turn against their owners in emergencies. On the other hand, a setting can be programmed in which the passenger gives their consent to this option as a potential solution. Such consent would be required from each passenger for each individual drive. The question, of course, is: who among us would be prepared to give such consent?

The increase of smart features in cars puts pressure on existing legislation. At the moment, the driver is responsible in every situation, regardless of assistive functions. An example of this is the accident in Florida that involved Tesla’s Autopilot function and resulted in the driver’s death. The U.S. National Highway Traffic Safety Administration did not find Tesla liable for the fatality, which occurred after a truck suddenly turned across oncoming traffic. The driver was not monitoring the traffic as actively as instructed by Tesla but, according to witness testimonies, was instead watching a Harry Potter movie on a DVD player. With the Autopilot function, the driver is responsible for retaking control of the vehicle in hazardous situations. In the United Kingdom, legislation specifically states that the driver is liable for any actions performed by the vehicle (the Road Traffic Act 1988).

In Finland, the legislation currently in force offers some answers to the question of liability. According to the Finnish Product Liability Act, compensation shall be paid for an injury or damage sustained or incurred because the product has not been as safe as could have been expected. According to the same section of the act, in assessing the safety of the product, the time when the product was put into circulation, its foreseeable use, the marketing of the product and the instructions for use, as well as other circumstances, shall be taken into consideration. However, section 2 of the same act excludes liability for damage that the vehicle has caused to the vehicle itself.

If the driver’s role in the vehicle becomes similar to that of a passive passenger, should the driver’s responsibility be adjusted accordingly? Can a person travelling in a self-driving car be compared to a passenger in a taxi? Would the passenger be held responsible only in situations where the passenger has, through his or her own actions, caused a disruption that can be considered to have affected the actions of the driver? Can this analogy also be applied to other offences carried out by a vehicle? Tesla’s Autopilot can be set to deliberately exceed the speed limit, under certain conditions by up to 10 km/h. This is considered to improve driving safety, as the car adapts to the speed of other traffic when people are generally driving slightly above the speed limit.

It is very likely that, in the future, external operators will supply the software installed in car manufacturers’ vehicles. Will connected cars become computer-like platforms with the software purchased separately? Or will there be one or two large operators in the market whose software is used by all car manufacturers, as in the phone market (Android, iOS)? Will it be possible to switch the software later? This raises interesting questions about the liability related to connected cars. What is the responsibility of such a software developer, on the one hand, and of the car manufacturer, on the other, for the functions of the end product?

Over a sufficiently long period, all technical components will stop working, some sooner than others. Instead of breaking down linearly or gradually, software components often behave in a binary manner: either they are fully functional or not functional at all, which makes it quite difficult to check their condition. How can the likelihood of failure be detected, and how can the condition of these components be monitored? What is the liability of an individual component manufacturer if a defect in one part results in more extensive damage? Can the manufacturer’s liability be transferred to the consumer by, for example, requiring the consumer to maintain the components and to replace components or software at specific intervals according to a maintenance programme?

It is obvious that the Road Traffic Act, the Motor Liability Insurance Act and product liability legislation need to be amended to better take into account future changes in both technological development and driving culture. The current legislation leaves too many questions without clear answers and raises considerable problems of interpretation.

The situation makes both buyers and manufacturers uncertain about the division of liability when problems arise. Excessively strict product liability for the manufacturer, automatically making car manufacturers liable for any collision damages and accidents, would probably hinder the development of self-driving vehicles. The state should assume responsibility for solving ethical questions. The manufacturers could then adjust their technical solutions and software to the resulting parameters.

Kari-Matti Lehti

Partner

Mikko Leppä

Partner

Mikko Kaunisvaara

Legal Trainee